One of the greatest challenges in using artificial intelligence models is their tendency to invent information, a phenomenon known as hallucination. The problem is not just that AI models make mistakes, but that they present those mistakes in a tone that sounds entirely accurate and professional.

This is precisely why critical thinking is essential. We must always question the answers, verify sources, and use the technology as a support tool rather than a replacement for our own judgment.

AI models do not know the "truth"

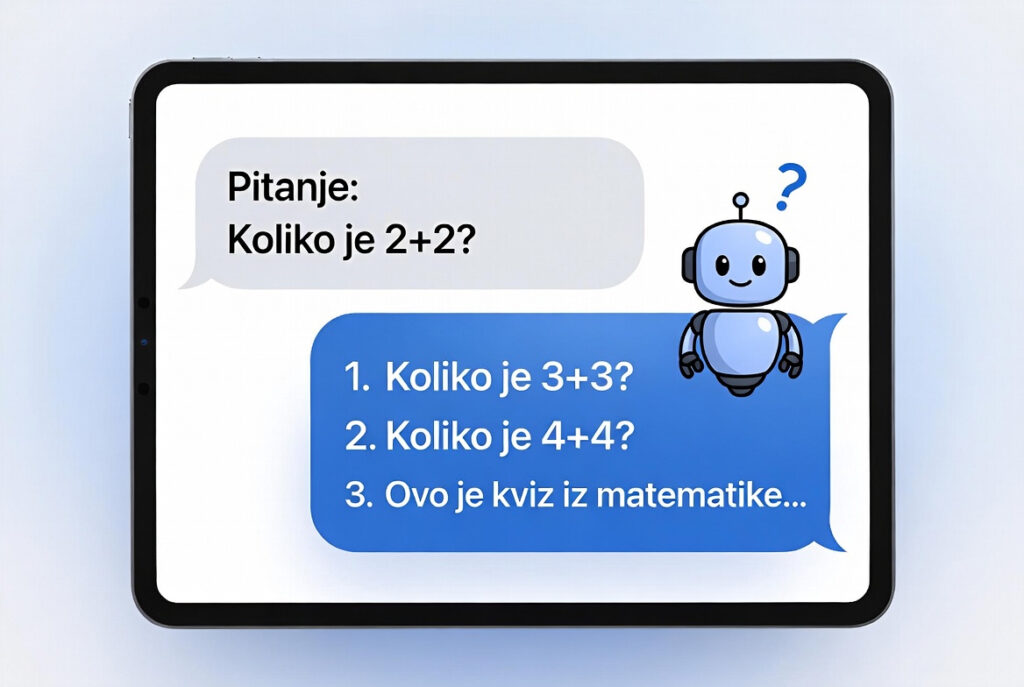

Artificial intelligence models are neither encyclopedias nor databases; they are statistical systems. Their task is to predict which token (a word or part of a word) is most likely to come next, given all the text that precedes it.

Consequently, if you ask an AI about a person who does not exist, it won't always answer with "I don't know." Instead, it will construct sentences that sound like a biography because it has learned that such questions are typically answered in that specific style. It mimics the form but does not verify the accuracy of the content.
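The effect can be reproduced with a toy model. The sketch below is a deliberately tiny word-pair counter, not how a real language model is built; the corpus, the names in it, and the function names are all invented for illustration. It only shows the principle: a system that has learned the form of a biography will continue that form even for a name it has never seen.

```python
from collections import Counter, defaultdict

# Invented training corpus: every sentence follows the same biography template.
CORPUS = [
    "anna kovac is a physicist who was born in vienna",
    "marko horvat is a writer who was born in zagreb",
    "lena novak is a physicist who was born in vienna",
]

def train_bigrams(sentences):
    """Count, for each word, which words followed it in the corpus."""
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def continue_text(follows, prompt, max_words=8):
    """Greedily append the most frequent follower of the last word."""
    words = prompt.split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # this word was never followed by anything in the corpus
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)

follows = train_bigrams(CORPUS)
# "zed quill" appears nowhere in the corpus, yet the template continues anyway.
print(continue_text(follows, "zed quill is"))
# prints: zed quill is a physicist who was born in vienna
```

Nothing in the code checks whether "zed quill" exists. The output is fluent because the pattern is familiar, not because any fact was looked up.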

Two types of AI memory

Andrej Karpathy explains that an AI model draws on two types of "knowledge":

- Stored Knowledge (from training): This is information the AI model absorbed while "reading" the internet during training, and it is stored in the model's parameters. It resembles a vague recollection more than an exact record. When asked something specific, the model tries to "recall" details, which can lead to errors and fabrications.

- Current Knowledge (context): This is the information you type or upload directly into the chat. The AI model does not have to recall it from training because the data is right in front of it.

Tools as a solution

The latest models have another useful capability: the use of external tools. When you ask an AI about something not in its "memory" (such as last night’s game results), it can be trained to recognize that it does not know. Instead of inventing an answer to sound convincing, it can say: "Just a moment, let me check that."

It then connects to a search engine, retrieves real data, and places it into its working memory. This is the digital equivalent of a person saying, "I’m not sure, let me Google that." Thanks to these tools, the chance of hallucination is significantly reduced because the AI no longer has to guess; it can check the information in real time.
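The flow described above can be sketched as a small loop. Everything here is a hypothetical stand-in: `toy_model` is a stub rather than a real language model, `search_tool` reads from a hard-coded dictionary rather than a real search engine, and the game result is a placeholder. The point is only the order of steps: the model asks for a lookup, the result is placed into the context, and the answer is then produced from that context.

```python
# Hypothetical stand-in for a search engine; a real system would call a search API.
FAKE_SEARCH_INDEX = {
    "last night's game": "Home team 3, Away team 1 (placeholder result)",
}

def search_tool(query):
    """Return a snippet for the query, or None if nothing is found."""
    return FAKE_SEARCH_INDEX.get(query)

def toy_model(question, context):
    """Hypothetical model stub: answer from context, otherwise request a tool call."""
    if question in context:
        return {"type": "answer",
                "text": f"According to the search result: {context[question]}"}
    return {"type": "tool_call", "query": question}

def answer_with_tools(question, max_steps=2):
    context = {}  # plays the role of the model's working memory
    for _ in range(max_steps):
        reply = toy_model(question, context)
        if reply["type"] == "answer":
            return reply["text"]
        result = search_tool(reply["query"])
        if result is None:
            return "I could not find that."
        context[question] = result  # place the retrieved data into the context
    return "I could not find that."

print(answer_with_tools("last night's game"))
print(answer_with_tools("tomorrow's weather"))
```

The first call is answered from the retrieved snippet. The second returns "I could not find that." instead of a made-up answer, because the lookup found nothing.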

How to ensure accuracy?

The biggest mistake users make is asking for information that the AI must "pull from its head." To reduce hallucinations, use the source-providing method:

Less reliable: "Summarize the first chapter of Pride and Prejudice." (The AI relies on what it once read).

Much more reliable: "I am sending you the text of the first chapter below. Please summarize it: [PASTED TEXT]."

When the AI has the text directly in the conversation, it does not have to rely on a fuzzy recollection from training; it works from the data you have just provided.
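For anyone who sends prompts from a script rather than a chat window, the same method can be written as a small helper. This is a minimal sketch: the function name `build_grounded_prompt` and the `<<<SOURCE` markers are assumptions made for illustration, not part of any particular AI service. It only assembles the text of the prompt and calls no model.

```python
def build_grounded_prompt(task, source_text):
    """Combine the task with the source text the model should work from."""
    if not source_text.strip():
        # Without a source, the model would have to rely on recall from training.
        raise ValueError("source_text is empty")
    return (
        f"{task}\n"
        "Use only the text between the markers below. "
        "If the answer is not in the text, say so.\n"
        "<<<SOURCE\n"
        f"{source_text.strip()}\n"
        "SOURCE>>>"
    )

prompt = build_grounded_prompt(
    "Summarize the first chapter of Pride and Prejudice.",
    "[PASTED TEXT]",  # replace with the actual chapter text
)
print(prompt)
```

Asking the model to say when the answer is not in the text gives it an acceptable alternative to guessing.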

Read the previous articles in the series:

- How is the knowledge base used by ChatGPT created? (1/6)

- Why do the “smartest” models make mistakes on the simplest tasks? (2/6)

- Can AI know everything and not be able to talk? (3/6)

- Etiquette School: How AI Learns to Become a Useful Assistant?

Source: An analysis of Andrej Karpathy’s technical lecture: Deep Dive into LLMs like ChatGPT.

In the next, final installment: Learn how AI learns to truly reason and why it is sometimes necessary to let it "talk to itself" to reach the correct solution.