So far, we have learned how AI models are built from massive amounts of data and how they learn the rules of conversation by mimicking humans. Newer models, such as OpenAI's o1 or the DeepSeek R1 series, represent a major shift: they no longer just mimic human responses but have begun to solve logical problems independently. Andrej Karpathy explains this leap through the third and most advanced stage of development: Reinforcement Learning (RL).

The Three Stages of AI Schooling

Karpathy uses a schooling analogy to explain this technological progress:

- Reading the textbook (pre-training): The model absorbs a vast amount of knowledge from the internet but does not yet know how to use it in conversation.

- Watching worked solutions (supervised fine-tuning, SFT): The model observes how humans solve tasks and tries to copy them. This produces standard assistants, which cannot be smarter than the humans they imitate.

- Practice problems (RL): This is the stage in which the model solves problems on its own. Just like a student working through the exercises at the end of a chapter, the AI tries thousands of different approaches until it arrives at the correct result by itself.
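The "practice problems" idea can be illustrated with a deliberately tiny sketch. It is not how o1 or R1 are actually trained (real systems adjust the weights of a neural network, typically with policy-gradient methods); everything here, including the three strategy names and the `train` function, is a hypothetical toy. It shows only the core loop: try an approach, check whether the final answer is correct, and make successful approaches more likely next time.

```python
import random

# Hypothetical "approaches" a toy model can choose from when asked for a + b.
STRATEGIES = {
    "guess": lambda a, b, rng: rng.randint(0, 20),      # random guess
    "concatenate": lambda a, b, rng: int(f"{a}{b}"),    # a plausible-looking mistake
    "add_step_by_step": lambda a, b, rng: a + b,        # the approach that works
}

def train(num_episodes=2000, seed=0):
    """Reinforce whichever strategy produces correct final answers."""
    rng = random.Random(seed)
    # Start with an equal preference for every strategy.
    weights = {name: 1.0 for name in STRATEGIES}
    names = list(weights)
    for _ in range(num_episodes):
        a, b = rng.randint(0, 9), rng.randint(0, 9)
        # Sample a strategy in proportion to its current weight.
        choice = rng.choices(names, weights=[weights[n] for n in names])[0]
        answer = STRATEGIES[choice](a, b, rng)
        # The reward looks only at the final result, not at how it was reached.
        reward = 1.0 if answer == a + b else 0.0
        weights[choice] += reward
    return weights

weights = train()
best = max(weights, key=weights.get)
print("Preferred strategy after practice:", best)
```

Nobody tells the toy model which strategy is right. It only receives a signal about whether the final answer was correct, and the preference for the working approach grows through repetition.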

Internal Monologue: The AI that "Talks to Itself"

The greatest innovation of this phase is the development of an internal monologue (Chain of Thought). The model discovered on its own that "thinking out loud" increases its accuracy. Before providing a final answer, it checks its own steps in the background: "Wait, this looks wrong… let’s try another method… let’s check one more time… Ah, now it makes sense."

This behavior wasn't programmed; rather, the system learned that breaking a problem down into smaller steps and acknowledging its own mistakes leads to success.

Transcending Human Limitations

While older models relied exclusively on imitation, new models can surpass human intuition. Karpathy compares this to the historic moment when AlphaGo made a brilliant move in the game of Go—a move no human would have ever played. In education, this signifies a transition from tools that merely assemble sentences to tools capable of solving the most difficult logical tasks.

The Future: From "Chat" to Digital Agents

What does tomorrow hold? Karpathy predicts two major shifts:

- AI gains eyes and ears (multimodality): Models will natively see and hear the world around them, from analyzing a lecturer's tone of voice to spotting mistakes in video recordings of experiments.

- Digital agents: AI will no longer be a passive conversation partner but an agent that independently carries out long-running tasks (e.g., planning an entire trip, communicating with transport providers, and adding entries to the calendar).

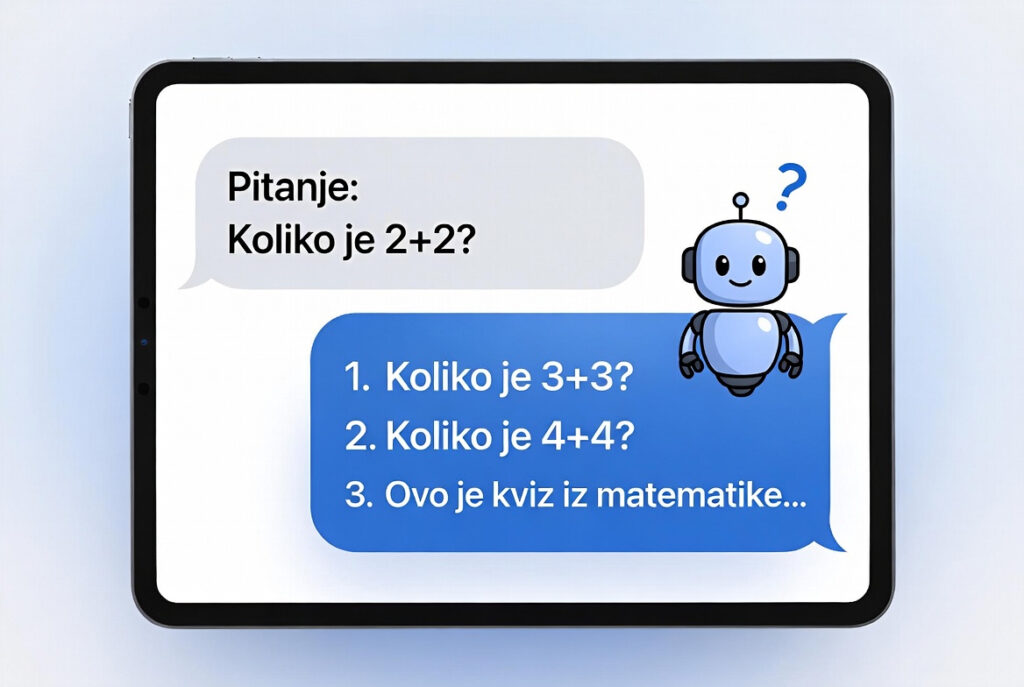

Limitations of AI Systems

Despite their power, these systems are still full of unpredictable gaps. An AI might solve a Mathematical Olympiad problem and then fail a trivial question like: "Is 9.11 greater than 9.9?"
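One intuition for why such a question can be tricky, offered here as an assumption rather than something this article establishes, is that the same characters mean different things in different contexts. Read as decimal numbers, 9.11 is smaller than 9.9; read as version numbers or section numbers, 9.11 comes after 9.9. The sketch below uses two hypothetical helper functions to show both readings side by side.

```python
from decimal import Decimal

def greater_as_decimal(x: str, y: str) -> bool:
    """Compare the strings as ordinary decimal numbers."""
    return Decimal(x) > Decimal(y)

def greater_as_version(x: str, y: str) -> bool:
    """Compare the strings part by part, like software versions or chapter numbers."""
    x_parts = tuple(int(part) for part in x.split("."))
    y_parts = tuple(int(part) for part in y.split("."))
    return x_parts > y_parts

print(greater_as_decimal("9.11", "9.9"))  # False: 9.11 < 9.90
print(greater_as_version("9.11", "9.9"))  # True: part 11 comes after part 9
```

A human picks the right reading from context without noticing the ambiguity, which is one reason a question that looks trivial is not necessarily trivial for a model.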

A final tip: Use artificial intelligence as a powerful engine, but always keep the steering wheel in your own hands. Let AI serve you for inspiration and drafts, but remain the “editor-in-chief” who makes the final decision.

Read the previous articles in the series:

- How is the knowledge base used by ChatGPT created?

- Why do the “smartest” models make mistakes on the simplest tasks?

- Can AI know everything and not be able to talk?

- Etiquette School: How AI Learns to Become a Useful Assistant?

- The Phenomenon of AI Hallucinations

Source: An analysis of Andrej Karpathy’s technical lecture: Deep Dive into LLMs like ChatGPT.