A training process called RLHF (Reinforcement Learning from Human Feedback) makes large AI models less biased and safer, but it can also reduce their creativity. In practice, as these models get better at avoiding offensive or risky content, they also lose some of their ability to generate a wide range of new or diverse ideas.

Making AI chatbots and text generators safer can, as a side effect, make them more boring. The more designers work to remove bias or risky content from a model, the less likely that model is to come up with novel and interesting answers. This means users and companies need to choose the right kind of model for the job: creative but riskier for brainstorming and writing, or safer but more repetitive for customer service and other sensitive contexts.

What did we learn?

• Safety comes at a price: Methods like RLHF, which filter out unwanted biases and toxic content, work well, but they also make models less creative. The researchers define creativity as a model’s ability to use a wide range of language structures and express diverse ideas.

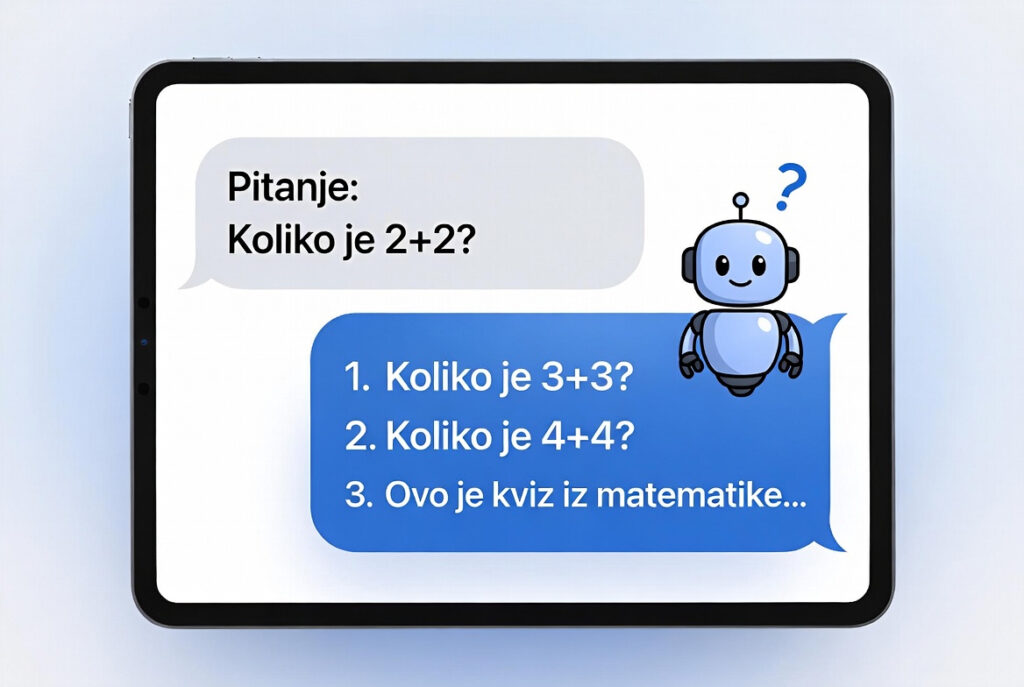

• Reduced diversity: The paper describes three experiments comparing “base” AI models (unaligned) with those made safer by RLHF (“aligned” models). Aligned models generate less diverse names, backgrounds, text styles, and product reviews. For example, when creating fictional customer profiles, aligned models repeatedly use the same names and personalities, while base models show much greater diversity.

• Models get “stuck”: The research finds that safer AI models tend to fall into a rut, sticking to a few “safe” templates or options even when asked to be creative. This is called “mode collapse”: the model’s outputs concentrate on a small set of possible responses.

• A trade-off for businesses and users: Safe, consistent models are best suited to situations like customer support or content moderation, while more creative, less filtered models are preferable for tasks such as brainstorming and writing.
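To make “less diverse” and “mode collapse” a bit more concrete, here is a minimal sketch of one simple way to quantify diversity in a batch of model outputs. It is not necessarily the method used in the paper. It computes the Shannon entropy of the output distribution and the fraction of unique outputs. The two name lists are made-up, hypothetical examples, not data from the study. A collapsed model that keeps repeating the same few names scores lower on both measures.

```python
from collections import Counter
import math


def shannon_entropy(items):
    """Entropy (in bits) of the empirical distribution of the given outputs."""
    counts = Counter(items)
    total = len(items)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def distinct_ratio(items):
    """Fraction of outputs that are unique (1.0 means no repeats)."""
    return len(set(items)) / len(items)


# Hypothetical outputs: names generated for 8 fictional customer profiles.
# These lists are illustrative only, not results from the paper.
base_names = ["Maya", "Tomasz", "Aiko", "Daniel", "Fatima", "Liam", "Priya", "Omar"]
aligned_names = ["Sarah", "Sarah", "John", "Sarah", "John", "Sarah", "Emily", "Sarah"]

for label, names in [("base", base_names), ("aligned", aligned_names)]:
    print(
        f"{label}: entropy={shannon_entropy(names):.2f} bits, "
        f"distinct={distinct_ratio(names):.2f}"
    )
```

With eight all-different names, the entropy reaches its maximum of log2(8) = 3 bits; when most outputs repeat the same name, both numbers drop, which is the statistical signature of a model stuck on a few “safe” options.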