The first and by far most expensive stage of creating an AI language model, which we call “pre-training,” does not produce the kind of useful assistant (like ChatGPT) we use today. The result of that first stage is the so-called base model.

Experts like Andrej Karpathy describe this form of AI very simply: it is not a smart conversationalist, but just an “extremely expensive text completion system.”

Although this model has “read” the entire Internet and possesses a vast amount of knowledge, it still cannot talk to humans at this stage.

The “Zip File” of the Internet: Knowledge as Statistics

To understand the nature of the base model, Karpathy offers a brilliant analogy: imagine it as a compressed digital archive of the entire Internet. In its hundreds of billions of parameters, the model has condensed statistical patterns from an incredible 15 trillion basic language units (tokens).

However, it is important to understand that this is lossy compression. The model does not memorize texts from the Internet verbatim, like a digital encyclopedia or database, but learns the style of expression. It does not store facts as fixed records, but as statistical probabilities. Therefore, it cannot “cite” the Internet exactly, but generates text that sounds like something that could be found online.
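To make “knowledge as statistics” more concrete, here is a minimal sketch in Python. It is a toy word-pair (bigram) counter, not how a real language model is built: real models use neural networks with billions of parameters. The function name `train_bigram` and the tiny example corpus are invented for illustration only.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count which word follows which, then turn the counts into probabilities."""
    words = text.split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    # Normalize each word's follower counts into a probability distribution.
    return {
        word: {nxt: n / sum(followers.values()) for nxt, n in followers.items()}
        for word, followers in counts.items()
    }

# Hypothetical miniature "Internet" used only for this illustration.
corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigram(corpus)

print(model["the"])  # {'cat': 0.5, 'mat': 0.25, 'fish': 0.25}
```

Notice that the finished `model` no longer contains the original sentence. It only holds the odds of what tends to follow each word, which is the sense in which the compression is lossy.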

Why does the base model not take part in a conversation?

The main reason why the base model cannot be a useful assistant is that it has no concept of conversation. It was not trained to help, but to continue whatever sequence of words it is given.

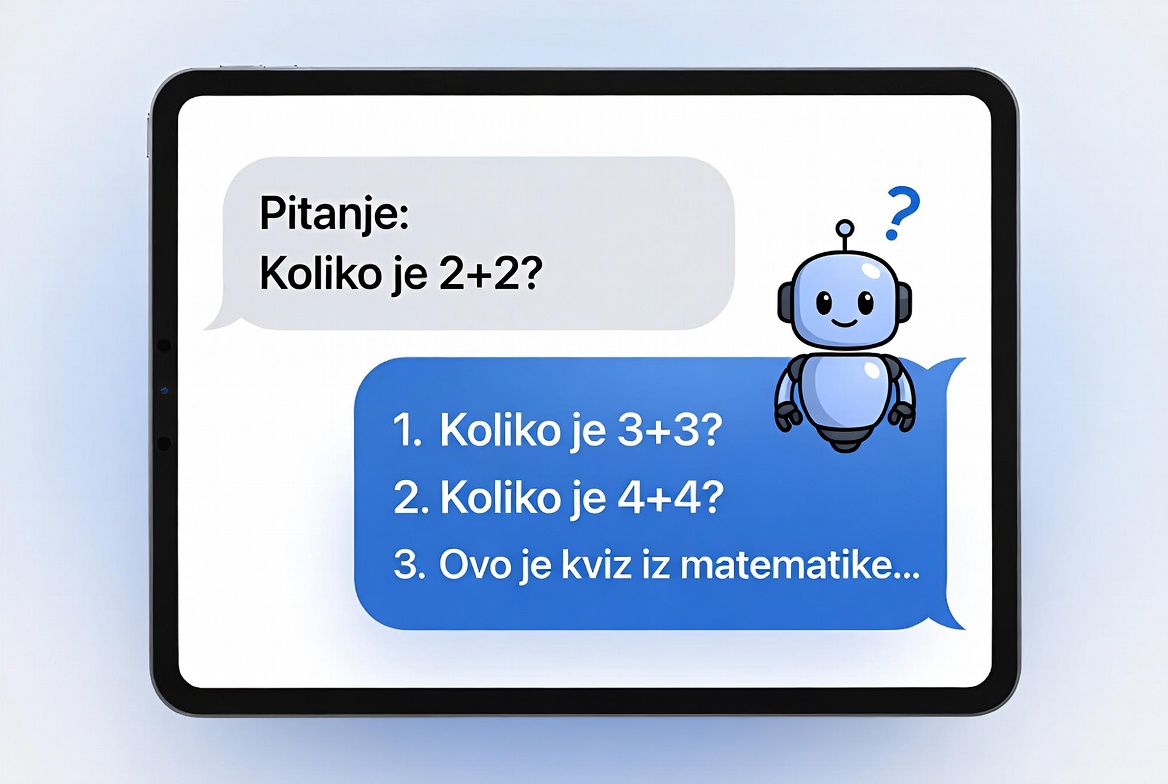

If we ask the base model a simple question, “What is 2 + 2?”, it will most likely not answer “4”. Instead, it will continue the text the way an online document would continue (e.g. a school quiz or knowledge test). It will probably generate new questions such as “What is 3 + 3?” or “What is the definition of addition?”. For the base model, the question is not the beginning of a dialogue, but the beginning of a document that it tries to complete as plausibly as possible by imitating the styles it encountered during training.
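The quiz behavior can be imitated with a very small sketch. It assumes a hypothetical helper, `complete`, that looks at the last three words and appends whichever word most often followed them in its “training text”. This is a deliberate simplification of what a real base model does, and the quiz text is made up for the example.

```python
from collections import Counter

def complete(prompt, corpus, n_tokens, context_size=3):
    """Greedily append the word that most often followed the last few words."""
    corpus_tokens = corpus.split()
    tokens = prompt.split()
    for _ in range(n_tokens):
        context = tokens[-context_size:]
        # Collect every word that followed this exact context in the corpus.
        followers = Counter(
            corpus_tokens[i + context_size]
            for i in range(len(corpus_tokens) - context_size)
            if corpus_tokens[i:i + context_size] == context
        )
        if not followers:
            break  # this context never appeared in the training text
        tokens.append(followers.most_common(1)[0][0])
    return " ".join(tokens)

# Hypothetical training text: a quiz that lists questions without answers.
quiz = "What is 1 + 1? What is 2 + 2? What is 3 + 3? What is 4 + 4?"

print(complete("What is 2 + 2?", quiz, n_tokens=5))
# What is 2 + 2? What is 3 + 3?
```

The toy never prints “4” because nothing in its training text ever followed a question with an answer. It simply continues the document it recognizes.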

From imitator to digital assistant

Despite possessing an unimaginable amount of information, the base model is not usable in everyday work on its own. It has no “personality”, does not follow instructions, and can often wander in unexpected directions because it only imitates patterns from the Internet, including those that are less useful or incorrect.

This insight is crucial for the education system because it explains the origin of so-called “hallucinations”. AI does not “lie” intentionally, but simply tries to generate the most statistically likely continuation of a sentence. If that continuation sounds convincing, the model will output it, regardless of whether it is grounded in fact.

Only in the second phase, which is much cheaper and shorter, does this text simulator turn into a useful assistant that understands instructions and knows how to conduct a meaningful conversation.

Read the previous articles in the series:

- How is the knowledge base used by ChatGPT created? (1/6)

- Why do the “smartest” models make mistakes on the simplest tasks?

Source: An analysis of Andrej Karpathy’s technical lecture: Deep Dive into LLMs like ChatGPT.

In the next installment, we write about the post-training process and find out how AI goes through a “school of good manners” in order to turn from an Internet simulator into a digital assistant.