The paper “Proof or Bluff? Evaluating LLMs on 2025 USA Math Olympiad” evaluates how well current state-of-the-art large language models (LLMs) solve the six proof-based problems of the 2025 USA Mathematical Olympiad (USAMO). Unlike earlier mathematical benchmarks, which checked only final numerical answers, this study grades the models’ ability to write complete, rigorous proofs of the kind required in advanced mathematics competitions.

This research tested how well the latest AI language models can solve some of the most difficult mathematical problems given to top high school students in the United States. It found that these AI systems are not yet good at writing detailed and correct mathematical proofs, often making errors in logic or calculations. They typically do not realize when they are wrong and cannot be fully trusted without human verification. This shows that while AI has made great progress, it still needs significant improvement before it can handle the most challenging types of mathematical reasoning that humans do.

What did we learn?

Overall poor performance: All LLMs tested struggled significantly on the USAMO problems, averaging fewer than 5 out of 42 points. This reveals a large gap in their mathematical reasoning and proof-writing skills, despite their success in earlier competitions that required only numerical answers.

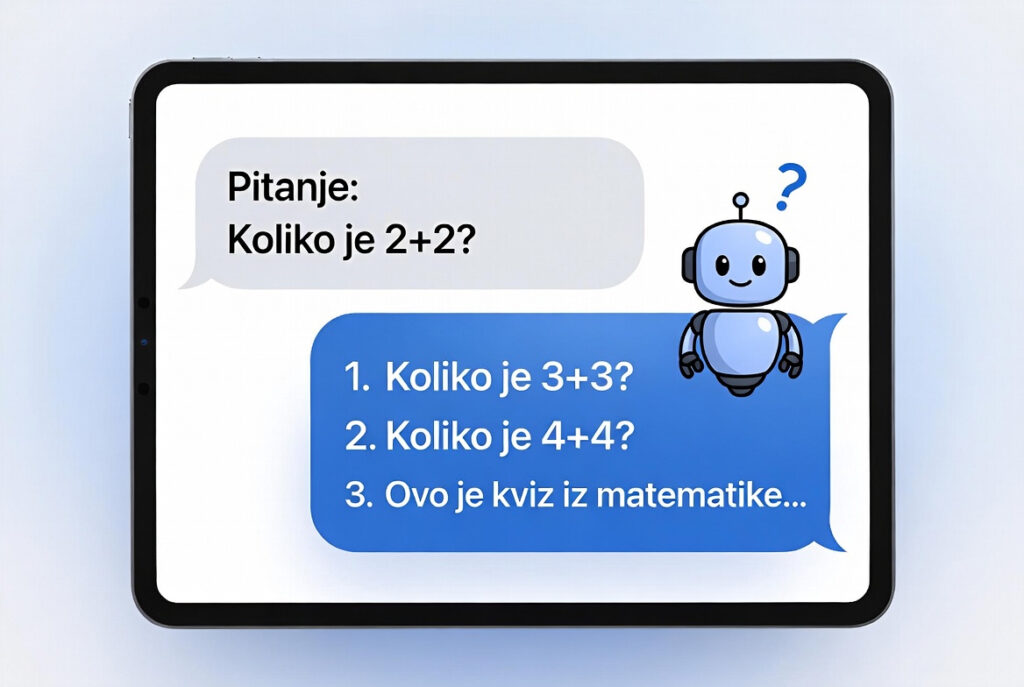
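To put the 42-point scale in context: each of the six USAMO problems is graded out of 7 points, so the maximum total is 6 × 7 = 42. The minimal sketch below shows how a total and a percentage could be computed from per-problem scores. The function name `usamo_total` and the example scores are illustrative assumptions, not code or results from the paper.

```python
MAX_POINTS_PER_PROBLEM = 7  # USAMO grading: each problem is worth 7 points
NUM_PROBLEMS = 6            # the 2025 USAMO has six problems


def usamo_total(problem_scores):
    """Return (total, percent) for six per-problem scores on the 0-7 scale."""
    if len(problem_scores) != NUM_PROBLEMS:
        raise ValueError("expected six problem scores")
    if any(not 0 <= s <= MAX_POINTS_PER_PROBLEM for s in problem_scores):
        raise ValueError("each score must be between 0 and 7")
    total = sum(problem_scores)
    max_total = MAX_POINTS_PER_PROBLEM * NUM_PROBLEMS  # 42
    return total, 100.0 * total / max_total


# Hypothetical scores for illustration only; NOT results from the paper.
example_scores = [1.5, 0, 0, 1.0, 0, 0]
total, percent = usamo_total(example_scores)
print(f"{total} / 42 = {percent:.1f}%")
```

Fractional scores are allowed here on the assumption that a model's score on a problem may be an average over several attempts or graders.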

Common failure modes: The models frequently took incorrect logical steps, made unjustified assumptions, showed little creativity in exploring alternative solution strategies, and occasionally made algebraic or arithmetic errors. They tended to generalize incorrectly from small examples and sometimes asserted the required final answer without justification.

Models consistently confident but wrong: Unlike human competitors, who usually recognize when they have not solved a problem, the LLMs confidently claimed to have found solutions even when those solutions were incorrect. This highlights how hard it is to trust AI on rigorous mathematical problems without human validation.

Mixed quality of solutions: Some models (such as o3-mini and o1-pro) generally produced clearer, better-structured proofs, while others produced chaotic, disorganized answers.

Difficulties with automated grading: Attempts to use other LLMs to grade the solutions automatically failed, because the LLM graders often overestimated the correctness of incorrect answers.