After the first phase of development, called "pre-training" (in which the model reads a large portion of the public internet), an AI model is merely an advanced text simulator. To become a useful assistant that helps us in our daily work, it must undergo a key transformation: Supervised Fine-Tuning (SFT).

Andrej Karpathy offers a precise mental model for understanding this stage: when you use AI, you are not communicating with a "magical intelligence," but with a neural simulation of an average human worker.

How is an AI assistant's "personality" created?

During the fine-tuning phase, the model is no longer trained on vast internet archives. Instead, it uses a much smaller but extremely high-quality dataset consisting of examples of ideal conversations written by humans.
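To make the idea of an "ideal conversation" concrete, here is a minimal sketch of what a single training example might look like and how it could be flattened into one piece of text for training. The field names (`role`, `content`) and the turn markers (`<|start|>`, `<|end|>`) are illustrative assumptions, not the format of any particular company.

```python
# One hypothetical fine-tuning example: a prompt plus the ideal answer
# written by a human labeler. Field names are assumptions for illustration.
conversation = [
    {"role": "user", "content": "What is 2 + 2?"},
    {"role": "assistant", "content": "2 + 2 = 4."},
]


def render(turns):
    """Flatten a conversation into a single training string.

    The <|start|> and <|end|> markers are made-up placeholders that show
    where each turn begins and ends.
    """
    parts = []
    for turn in turns:
        parts.append(f"<|start|>{turn['role']}\n{turn['content']}<|end|>")
    return "\n".join(parts)


if __name__ == "__main__":
    print(render(conversation))
```

A fine-tuning dataset is, in this simplified picture, a large collection of such records covering many topics.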

A crucial role is played by human labelers: teams of experts (programmers, writers, scientists) hired by companies like OpenAI to write ideal responses to various prompts. These experts follow detailed instructions, often spanning hundreds of pages, which define exactly how the assistant should sound:

- Be helpful and clear: The response must directly solve the user's problem.

- Be harmless and empathetic: The system must not generate harmful content.

The Process of Imitation: How does the system learn?

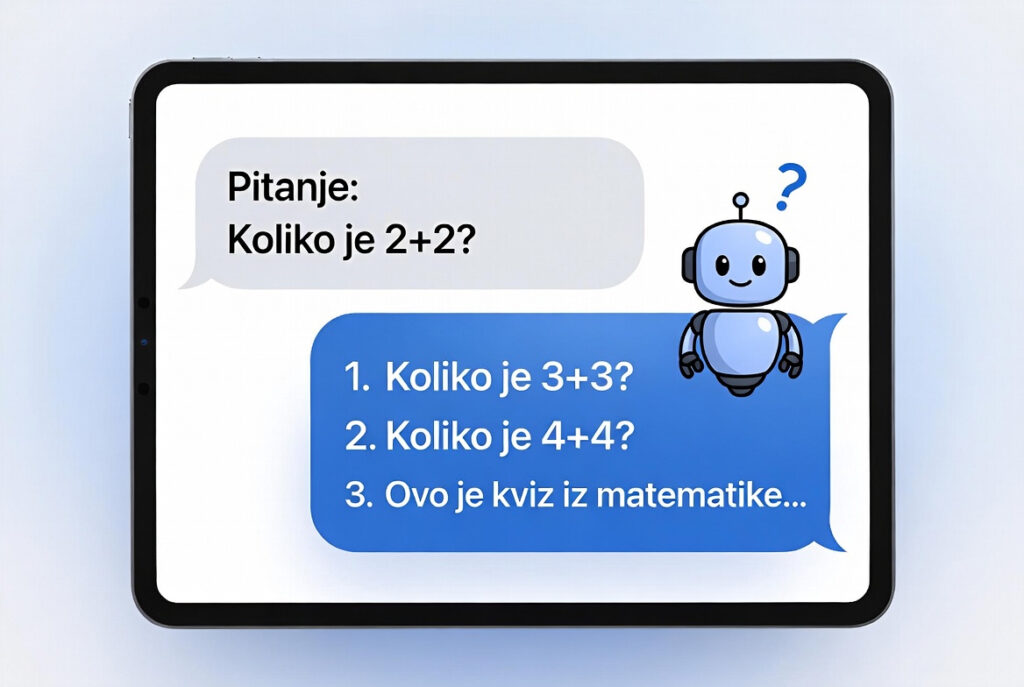

It is important to understand that, in this phase, the AI does not begin to "think" for itself. Instead, it statistically imitates the style and content of thousands of human responses prepared by experts.

Karpathy explains this directly: the response you receive from ChatGPT does not come from a conscious being, but from a system that statistically mimics human labelers. You are essentially communicating with the "average" knowledge and style of thousands of people who trained the model to speak with a specific voice defined by the corporation.
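The idea of statistical imitation can be illustrated with a deliberately tiny toy. The sketch below is not how real fine-tuning works (real systems adjust a neural network, not a table of word counts), and the three "labeler" responses are invented for the example. It only shows how a system can reproduce the most typical phrasing of its training examples without understanding anything.

```python
from collections import Counter, defaultdict

# Invented sample responses standing in for text written by human labelers.
labeler_responses = [
    "happy to help with that",
    "happy to help you today",
    "glad to help with that",
]


def train_bigrams(texts):
    """Count which word follows which across all example responses."""
    counts = defaultdict(Counter)
    for text in texts:
        words = ["<s>"] + text.split() + ["</s>"]  # start and end markers
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts, max_words=10):
    """Always pick the most frequent next word seen in the examples."""
    word, out = "<s>", []
    for _ in range(max_words):
        word = counts[word].most_common(1)[0][0]
        if word == "</s>":
            break
        out.append(word)
    return " ".join(out)


if __name__ == "__main__":
    model = train_bigrams(labeler_responses)
    print(generate(model))  # prints "happy to help with that"
```

The output is simply the most common path through the examples: the "average" of what the labelers wrote.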

What does this mean in practice?

When you ask an AI for advice or information, it is useful to keep the following facts in mind:

- It is not absolute truth: You are receiving a response that an average professional worker would write after brief research while following corporate guidelines.

- A reflection of the teacher: The model reflects the attitudes and biases of the people who trained it. It has no value system of its own; it merely mirrors the guidelines of its authors.

- Simulated identity: When an AI writes, "I am a model developed by OpenAI," it does not "feel" this. That sentence is simply part of the data it was trained on or was directly assigned to it as a rule of conduct.
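The "rule of conduct" route for a simulated identity can be sketched as an instruction placed at the start of the conversation before the user's first message. This is a simplified illustration; the function name `build_context`, the message structure, and the wording of the instruction are assumptions made for the example.

```python
def build_context(user_message, system_message=None):
    """Assemble the text the model actually sees for one request.

    If a system message is given, it is placed ahead of the user's
    message, so the model's answer is conditioned on it.
    """
    messages = []
    if system_message is not None:
        messages.append({"role": "system", "content": system_message})
    messages.append({"role": "user", "content": user_message})
    return messages


if __name__ == "__main__":
    # Hypothetical identity instruction supplied by the model's operator.
    identity = "You are a model developed by ExampleCorp."
    for message in build_context("Who made you?", identity):
        print(message["role"], ":", message["content"])
```

The model's answer about "who it is" then follows from text it was given, in the same way as any other part of its response.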

Conclusion: Artificial intelligence is a powerful tool because it carries the condensed knowledge and skills of thousands of experts. Nevertheless, the tool remains a simulation and a mirror of human effort, not an independent thinker.

Read the previous articles in the series:

- How is the knowledge base used by ChatGPT created? (1/6)

- Why do the “smartest” models make mistakes on the simplest tasks? (2/6)

- Can AI know everything and not be able to talk? (3/6)

Source: An analysis of Andrej Karpathy’s technical lecture: Deep Dive into LLMs like ChatGPT.

In the next installment: Why does AI invent data so confidently? We explore the phenomenon of "hallucinations" and learn how to recognize when the system is "bluffing."